Last week I wrote about how significant an improvement the proposed journalist rating system would be for news in general.

Upon further reflection, I think the rating system is not going to solve anything. It will only create more confusion, and we have to think of a better way.

So what is a rating system doing wrong?

Human beings have inherent biases. Even when we are aware of them, we often cannot escape them.

For example, the psychological difference between an item priced at $2.99 and another at $3.00 is bigger than the difference between $3.59 and $3.60, even though both gaps are one cent, because the left-most digit matters most (the left-digit bias).

Most of us know about it but still cannot help being affected by it when shopping.

More than 180 human biases have been observed and recorded, and there could be many more that our brains are utterly incapable of comprehending.

This means each of us perceives the world through our own unique and deeply flawed lenses, yet we cannot see the lenses themselves.

Here is a chart with some of the known biases.

A rating system rests on an inherent assumption and has a fundamental flaw:

1) It assumes that all journalists act intentionally, exploiting human biases with sophisticated techniques. In reality, they are no smarter than the average human being and have their own biases and affiliations.

2) A rating system uses other humans, who have their own prejudices and biases, to rate the work of someone who is no better than they are. Neither the raters nor the rated can overcome their biases.

It is like trying to improve a painting by handing out brushes of different colors to random people and asking them to make random strokes on the canvas.

So what could we do better?

What if we built an AI tool, usable by both journalists and readers, that detects and highlights biases, possible prejudices, and possible misrepresentations of facts, and points out potential bias traps to both?

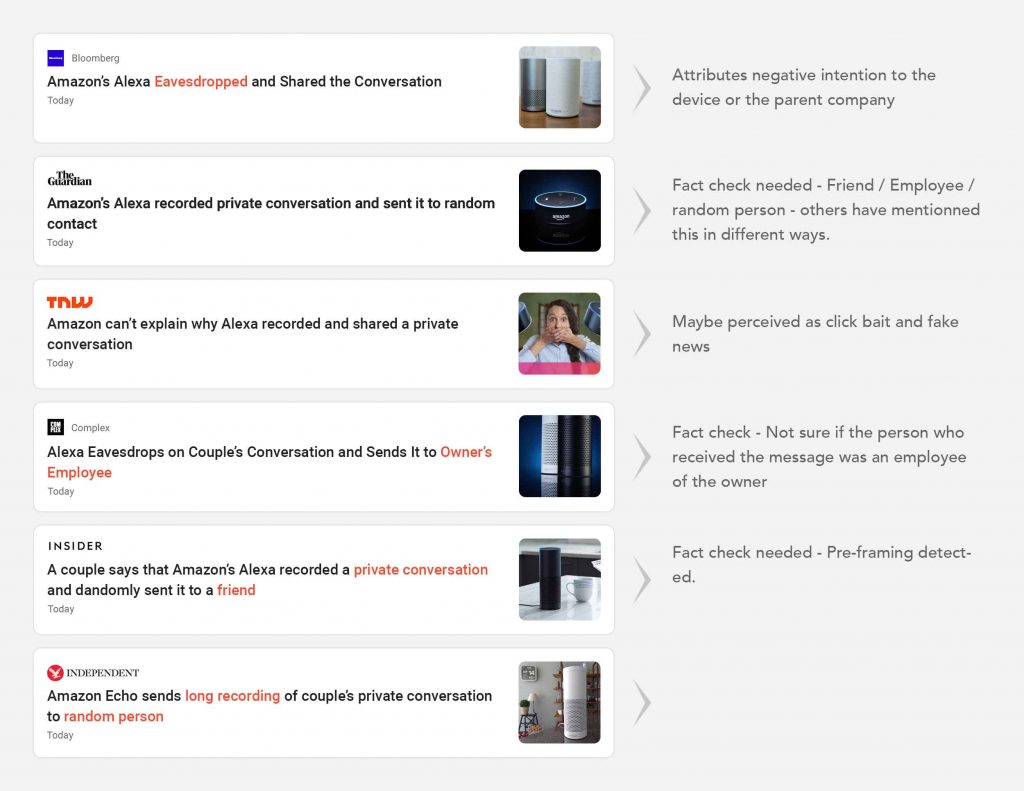

For example, for the journalists who wrote this headline about Alexa, the tool could point out things like this.

What is required is an AI-powered lens that makes our inherent biases visible and enables all of us to see more clearly.
